How to Download Files Using the Command Line from Any Website (cliget / CurlWget)

There are many cases where a CLI is more practical than a GUI. One of them is downloading multiple or large files from a website, where many problems can arise (e.g. unstable internet speed, limited bandwidth, browser crashes). This method is especially useful if we have a spare server with unmetered bandwidth: we can use the server to download the files and then copy them over, which saves time and effort compared with downloading them manually on our own computer.

There are many command-line tools for transferring and downloading data (e.g. curl, wget, aria2), but in this article we will only cover curl and wget, since they are the most popular ones.

- curl: A command-line tool and library for transferring data with URLs.

- wget: A software package for retrieving files using HTTP, HTTPS, FTP and FTPS.

However, some websites protect private files with cookies and sessions (e.g. digital downloads, backups, corporate files). In that case, we need a browser extension that can generate a curl/wget command that includes those cookies and session data. With such an extension, we can simply copy the command and run it on our server. The extensions I'm referring to are:

- cliget: a Firefox extension that auto-generates download commands (curl, wget, aria2) for login-protected files

- CurlWget: a Chrome extension that auto-generates download commands (curl, wget) for login-protected files

You can install whichever extension matches your preferred browser.

How to use the extensions

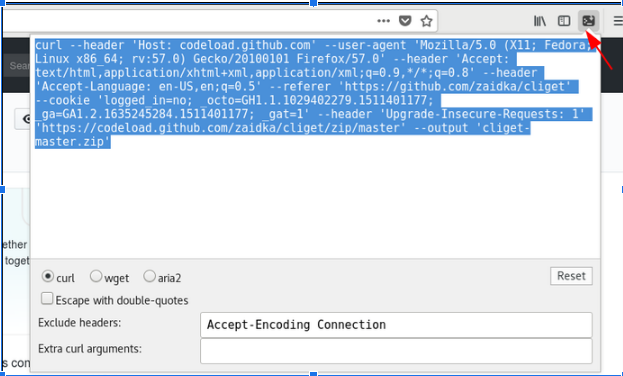

cliget

- First, start downloading the file normally by clicking the download link on the website, then click Cancel to abort the download.

- Next, click the extension icon in the top-right corner of your browser window. Three options are available (curl, wget and aria2).

- Copy the command string into the server terminal, but don't forget to first change to the directory where you want the file to be saved, e.g.

cd /home/ubuntu/downloads

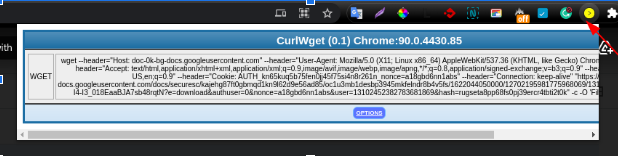

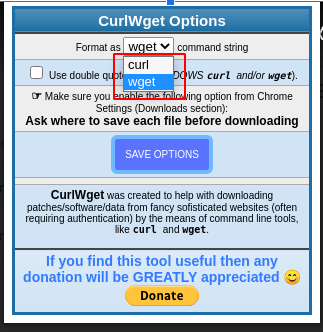
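Put together, the server-side part of this step is just two commands: change to the target directory, then paste the generated command. The snippet below is a minimal sketch of that flow. The directory path and file names are placeholders chosen for this example, and because the real command contains your own cookies and URL, a local `file://` download stands in for it so the snippet runs without network access. The commented line only shows the rough shape of a generated command; the exact flags the extension emits may differ.

```shell
#!/bin/sh
set -e

# Placeholder download directory; use whatever path suits your server.
DOWNLOAD_DIR="${TMPDIR:-/tmp}/downloads"
mkdir -p "$DOWNLOAD_DIR"
cd "$DOWNLOAD_DIR"

# In practice, paste the command generated by cliget/CurlWget here. Its rough
# shape (placeholders only, the real flags may differ) is something like:
#   curl --header 'Cookie: SESSION=...' -L -o 'my-file.zip' 'https://example.com/...'
# Stand-in so the sketch runs offline: "download" a local file via file://.
SOURCE_FILE="${TMPDIR:-/tmp}/sample-source.txt"
echo "hello from the server" > "$SOURCE_FILE"
curl -sS -o sample.txt "file://$SOURCE_FILE"

# Confirm the file landed in the chosen directory.
ls -l "$DOWNLOAD_DIR/sample.txt"
```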

CurlWget

- First, start downloading the file normally by clicking the download link on the website, then click Cancel to abort the download.

- Next, click the extension icon in the top-right corner of your browser window. Two options are available (curl and wget).

- NOTE: When you first install the extension, it may ask you to choose which command format to use by default.

- Copy the command string into the server terminal, but don't forget to first change to the directory where you want the file to be saved, e.g.

cd /home/ubuntu/downloads

How to run the command from Windows/Linux/Mac

The easiest way is to copy the command and paste it into the server terminal. Most of these tools already come with the OS; if they don't, you can install them manually by following these steps:

Linux

On a Linux server, we can use the distribution's built-in package manager (e.g. apt, snap, yum) to install the tools. Here is the command to install wget on a Debian/Ubuntu server with apt:

sudo apt install wget

Unix – macOS (Homebrew)

On a macOS server, there is a package manager called Homebrew, which is widely used by developers to set up development environments. Here is a step-by-step guide to installing wget with Homebrew:

- Download and install Homebrew by running

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

- After Homebrew is installed, install wget by running

brew install wget
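Before installing anything, it can help to check which tools are already there. The script below is a small sketch that reports whether curl and wget are installed and, for any missing tool, prints (but does not run) an install command for the first package manager it finds. The `suggest_install` helper is hypothetical and only knows about apt-get, yum and Homebrew; other package managers such as snap would need their own check.

```shell
#!/bin/sh
# Hypothetical helper: print an install command for a tool, based on the first
# package manager found. It only prints the command; nothing is installed.
suggest_install() {
  tool="$1"
  if command -v apt-get >/dev/null 2>&1; then
    echo "sudo apt-get install -y $tool"
  elif command -v yum >/dev/null 2>&1; then
    echo "sudo yum install -y $tool"
  elif command -v brew >/dev/null 2>&1; then
    echo "brew install $tool"
  else
    echo "no known package manager found; install $tool manually"
  fi
}

# Report each download tool and suggest an install command if it is missing.
for tool in curl wget; do
  if command -v "$tool" >/dev/null 2>&1; then
    echo "$tool: installed ($(command -v "$tool"))"
  else
    echo "$tool: missing -> $(suggest_install "$tool")"
  fi
done
```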

How to make the download a daemon process

A daemon (also known as a background process) is a Linux or Unix program that runs in the background. We might want to run the download in the background instead of in the foreground: it is easier to maintain, and the server session stays free for other work.

If you want to run the download in the background, simply add & at the end of the download command. It will look something like this:

curl -O https://example.com/backup-project-wp.zip &
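When a command ends with &, the shell starts it as a background job and stores its process ID in the special parameter `$!`. The sketch below saves that ID so the download can be checked or waited for later. To keep it runnable offline, it copies a locally generated 1 MiB file through a `file://` URL instead of downloading `backup-project-wp.zip`; the working directory and file names are placeholders.

```shell
#!/bin/sh
set -e

# Placeholder working directory and a local 1 MiB "remote" file.
WORKDIR="${TMPDIR:-/tmp}/bg-demo"
mkdir -p "$WORKDIR"
cd "$WORKDIR"
head -c 1048576 /dev/zero > source.bin

# Start the transfer in the background and remember its process ID.
curl -sS -o backup.bin "file://$WORKDIR/source.bin" &
DOWNLOAD_PID=$!
echo "download started in the background with PID $DOWNLOAD_PID"

# The shell is free for other commands here. Waiting is optional; it is done
# only so the script can report the result (with set -e, a failed download
# stops the script at this line).
wait "$DOWNLOAD_PID"
echo "download finished"
```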

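There are some cases where you may want to log the download process. You can write it to a log file by adding > download.txt 2>&1 & to the command. Since curl prints its progress meter to stderr rather than stdout, the 2>&1 part is needed for the progress to end up in the file:

curl -O https://example.com/backup-project-wp.zip > download.txt 2>&1 &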
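If the download should keep going after you disconnect from the server, one common approach (an addition to the steps above, not part of the original command) is to prefix it with `nohup`, which makes the process ignore the hangup signal sent when the session ends. The sketch below combines that with the log redirection; a local `file://` copy with placeholder names again replaces the real URL so it runs offline.

```shell
#!/bin/sh
set -e

# Placeholder working directory and a local 1 MiB "remote" file.
WORKDIR="${TMPDIR:-/tmp}/log-demo"
mkdir -p "$WORKDIR"
cd "$WORKDIR"
head -c 1048576 /dev/zero > source.bin

# nohup: keep running after logout (ignore SIGHUP).
# > download.txt 2>&1: stdout and stderr (where curl writes its progress
# meter and errors) both go to the log file.
nohup curl -o backup.bin "file://$WORKDIR/source.bin" > download.txt 2>&1 &
DOWNLOAD_PID=$!

# Wait only so this demo has a finished log to show; on a real server you
# would log out and inspect the log later.
wait "$DOWNLOAD_PID"

# curl redraws its progress meter with carriage returns, so the last bytes
# of the log hold the most recent status.
tail -c 300 download.txt
echo
```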

How to see the progress of the download

Before leaving the download running on the server, you should test the command first to make sure it works. After the command has been started, we may also want to check on it regularly to see whether it is actually downloading the files. One command we can use to list the running download processes is:

ps -ax | grep curl
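To see whether a download is actually making progress, it also helps to look at the file itself. The hypothetical `check_progress` helper below prints the current size of a (partial) download, whether a curl or wget process is still running (only if `pgrep` is installed), and the tail of its log file if one is given. Running it a few times and watching the size grow is a quick sanity check. The function name and its arguments are assumptions made for this sketch.

```shell
#!/bin/sh
# Hypothetical helper: check_progress <downloaded-file> [log-file]
check_progress() {
  file="$1"
  log="${2:-}"

  # Size of the file on disk so far (tr strips the padding some wc versions add).
  if [ -f "$file" ]; then
    echo "size so far: $(wc -c < "$file" | tr -d ' ') bytes"
  else
    echo "$file does not exist yet"
  fi

  # Is any curl/wget process still running? (-x matches the exact process name.)
  if command -v pgrep >/dev/null 2>&1; then
    if pgrep -x curl >/dev/null || pgrep -x wget >/dev/null; then
      echo "a curl/wget process is still running"
    else
      echo "no curl/wget process is running"
    fi
  fi

  # Last part of the log, if one was given and exists.
  if [ -n "$log" ] && [ -f "$log" ]; then
    tail -c 200 "$log"
    echo
  fi
}
```

For example, `check_progress backup-project-wp.zip download.txt` would check the file and log produced by the logging command in the previous section, since `curl -O` saves the file under its remote name.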

How to test file integrity for archived/compressed files

The last thing to do, once the server has finished downloading the files, is to check their integrity. For Linux/Unix users, this can easily be done with command-line tools (e.g. tar, unzip, unrar, 7z). Here is how to test file integrity with some of these tools:

Tar

The tar (Tape Archive) command-line tool is one of the most popular tools for working with archives. It lets us create and extract archives, optionally compressed with formats such as gzip or bzip2. The following command tests a .tar.gz file by listing its contents and discarding the listing; if the archive is damaged, tar prints an error and exits with a non-zero status:

tar -tzf my_tar.tar.gz > /dev/null
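To see what this check catches, the sketch below builds a small `.tar.gz`, verifies it, and then verifies a copy truncated to half its size, which is roughly what an interrupted download leaves behind. `verify_tar`, the working directory and the file names are hypothetical; the check itself is the same `tar -tzf ... > /dev/null` command, with its exit status turned into an OK/CORRUPTED message.

```shell
#!/bin/sh
# Placeholder working directory with some sample data to archive.
WORKDIR="${TMPDIR:-/tmp}/tar-demo"
rm -rf "$WORKDIR" && mkdir -p "$WORKDIR/data"
cd "$WORKDIR" || exit 1

# Random bytes do not compress, so the archive stays about 200 KB.
head -c 200000 /dev/urandom > data/random.bin
tar -czf my_tar.tar.gz data

verify_tar() {
  # -t lists the contents, which forces the whole archive to be read and
  # decompressed; the listing and any error messages are discarded.
  if tar -tzf "$1" > /dev/null 2>&1; then
    echo "$1: OK"
  else
    echo "$1: CORRUPTED"
  fi
}

verify_tar my_tar.tar.gz

# Keep only the first half of the archive to simulate an interrupted download.
size=$(wc -c < my_tar.tar.gz | tr -d ' ')
head -c $((size / 2)) my_tar.tar.gz > broken.tar.gz
verify_tar broken.tar.gz
```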

Unzip

Aside from tar, we can also use unzip to test file integrity. Although it is not installed by default on many Linux distributions, we can easily install it with a package manager (e.g. apt, snap, yum, or Homebrew on macOS). You can refer to this article on how to install it on your system. Here is the command to test file integrity with unzip:

unzip -t my_files.zip

Unrar

Unrar is another popular tool for dealing with compressed files; it supports the .rar format. You can refer to this article on how to install it on your system. Here is the command to test file integrity with unrar:

unrar t my_files.rar
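Once several archives have finished downloading, the three checks above can be run in one pass. The sketch below picks the command from this article based on the file extension and prints one line per file. The helper names and the extension-to-tool mapping are assumptions for illustration, and a missing tool (for example, unrar not being installed) simply shows up as FAILED.

```shell
#!/bin/sh
# Hypothetical helper: test one archive with the tool matching its extension.
check_archive() {
  archive="$1"
  case "$archive" in
    *.tar.gz|*.tgz) tar -tzf "$archive" > /dev/null 2>&1 ;;
    *.zip)          unzip -tq "$archive" > /dev/null 2>&1 ;;
    *.rar)          unrar t "$archive" > /dev/null 2>&1 ;;
    *)              echo "SKIP    $archive (unknown type)"; return 0 ;;
  esac
  status=$?   # exit status of the tool that ran in the case above
  if [ "$status" -eq 0 ]; then
    echo "OK      $archive"
  else
    echo "FAILED  $archive"
  fi
  return "$status"
}

# Hypothetical helper: test every regular file in a directory (default: current).
check_all() {
  dir="${1:-.}"
  failures=0
  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    check_archive "$f" || failures=$((failures + 1))
  done
  echo "$failures archive(s) failed the integrity check"
  [ "$failures" -eq 0 ]
}
```

For example, `check_all /home/ubuntu/downloads` checks every file in the download directory used earlier and returns a non-zero status if any archive fails.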